Product analytics

This example notebook shows how you can analyze the basics of product analytics. It’s also available as a full Jupyter notebook to run on your own data (see how to get started in your notebook), or you can run Objectiv Up to try it out. The dataset used here is the same as in Up.

Get started

We first have to instantiate the model hub and an Objectiv DataFrame object.

from datetime import datetime

from modelhub import ModelHub, display_sql_as_markdown

# set the timeframe of the analysis

start_date = '2022-04-01'

start_date_recent = '2022-07-01'

end_date = None

# instantiate the model hub, set the default time aggregation to daily, and set the global contexts that will be used in this example

modelhub = ModelHub(time_aggregation='%Y-%m-%d', global_contexts=['application'])

# get Bach DataFrames with Objectiv data: df_full covers the full timeframe, df only the recent period

df_full = modelhub.get_objectiv_dataframe(start_date=start_date, end_date=end_date)

df = modelhub.get_objectiv_dataframe(start_date=start_date_recent, end_date=end_date)

The location_stack column, and the columns taken from the global contexts, contain most of the event-specific data. These columns are JSON typed, and we can extract data from them using the keys of the JSON objects with SeriesLocationStack methods, or the context accessor for global context columns. See the open taxonomy example for how to use the location_stack and global contexts.

# extract the application id from the global contexts, plus a readable feature name and the root location from the location stack

df_full['application_id'] = df_full.application.context.id

df_full['feature_nice_name'] = df_full.location_stack.ls.nice_name

df_full['root_location'] = df_full.location_stack.ls.get_from_context_with_type_series(type='RootLocationContext', key='id')

# same columns for the recent DataFrame

df['application_id'] = df.application.context.id

df['feature_nice_name'] = df.location_stack.ls.nice_name

df['root_location'] = df.location_stack.ls.get_from_context_with_type_series(type='RootLocationContext', key='id')
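To make the extracted columns more concrete, here is a minimal plain-Python sketch that treats a location stack as a list of dicts. The functions type_label, nice_name and get_from_context_with_type are hypothetical. They are not the Bach implementation. The name format is inferred from the feature_nice_name outputs in this notebook: the innermost element comes first, followed by its parents from the root down.

```python
import re


def type_label(context_type):
    """Turn a context type into a readable label, e.g. 'RootLocationContext' -> 'Root Location'."""
    if context_type.endswith('Context'):
        context_type = context_type[:-len('Context')]
    # insert a space before every capital letter except the first one
    return re.sub(r'(?<!^)(?=[A-Z])', ' ', context_type)


def nice_name(location_stack):
    """Hypothetical re-creation of the feature_nice_name format seen in the outputs."""
    parts = [f"{type_label(ctx['_type'])}: {ctx['id']}" for ctx in location_stack]
    if len(parts) == 1:
        return parts[0]
    # innermost element first, then its parents from the root downwards
    return f"{parts[-1]} located at {' => '.join(parts[:-1])}"


def get_from_context_with_type(location_stack, context_type, key):
    """Return `key` from the first context of `context_type`, or None if there is none."""
    for ctx in location_stack:
        if ctx['_type'] == context_type:
            return ctx.get(key)
    return None


stack = [
    {'id': 'about', '_type': 'RootLocationContext'},
    {'id': 'navbar-top', '_type': 'NavigationContext'},
    {'id': 'blog', '_type': 'LinkContext'},
]
print(nice_name(stack))  # Link: blog located at Root Location: about => Navigation: navbar-top
print(get_from_context_with_type(stack, 'RootLocationContext', 'id'))  # about
```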

Have a look at the data

# show the latest sessions first, sorted by session and hit number

df.sort_values(['session_id', 'session_hit_number'], ascending=False).head()

day moment user_id location_stack event_type stack_event_types session_id session_hit_number application application_id feature_nice_name root_location

event_id

17f50b0a-2aa9-46bc-9610-efe8d4240577 2022-07-30 2022-07-30 18:59:16.074 8a2fbdc0-f7af-4a3a-a6da-95220fc8e36a [{'id': 'home', '_type': 'RootLocationContext', '_types': ['AbstractContext', 'AbstractLo... VisibleEvent [AbstractEvent, NonInteractiveEvent, VisibleEvent] 1137 1 [{'id': 'objectiv-website', '_type': 'ApplicationContext', '_types': ['AbstractContext', ... objectiv-website Overlay: star-us-notification-overlay located at Root Location: home => Pressable: star-u... home

03081098-94f7-490e-9f94-bee37aa78270 2022-07-30 2022-07-30 18:26:50.260 1f7afff0-bde4-44da-9fa6-a2d6b0289373 [{'id': 'home', '_type': 'RootLocationContext', '_types': ['AbstractContext', 'AbstractLo... VisibleEvent [AbstractEvent, NonInteractiveEvent, VisibleEvent] 1136 1 [{'id': 'objectiv-website', '_type': 'ApplicationContext', '_types': ['AbstractContext', ... objectiv-website Overlay: star-us-notification-overlay located at Root Location: home => Pressable: star-u... home

ed409433-8ca4-417b-a9fd-007e5dd4388c 2022-07-30 2022-07-30 15:14:24.087 136b4cc0-ead0-47ae-9c87-55be86027e4f [{'id': 'home', '_type': 'RootLocationContext', '_types': ['AbstractContext', 'AbstractLo... VisibleEvent [AbstractEvent, NonInteractiveEvent, VisibleEvent] 1135 1 [{'id': 'objectiv-website', '_type': 'ApplicationContext', '_types': ['AbstractContext', ... objectiv-website Overlay: star-us-notification-overlay located at Root Location: home => Pressable: star-u... home

306d1fce-6cae-4956-844a-64000288ca5a 2022-07-30 2022-07-30 14:38:03.785 8d69b136-7db4-4a89-88e7-ba562fcd1f04 [{'id': 'home', '_type': 'RootLocationContext', '_types': ['AbstractContext', 'AbstractLo... ApplicationLoadedEvent [AbstractEvent, ApplicationLoadedEvent, NonInteractiveEvent] 1134 1 [{'id': 'objectiv-website', '_type': 'ApplicationContext', '_types': ['AbstractContext', ... objectiv-website Root Location: home home

825f6ab6-4499-4bca-a38f-a9828a0cd17e 2022-07-30 2022-07-30 13:44:58.251 102a4b16-8123-4f06-94a1-2e7e4787ebbe [{'id': 'about', '_type': 'RootLocationContext', '_types': ['AbstractContext', 'AbstractL... PressEvent [AbstractEvent, InteractiveEvent, PressEvent] 1133 4 [{'id': 'objectiv-website', '_type': 'ApplicationContext', '_types': ['AbstractContext', ... objectiv-website Link: blog located at Root Location: about => Navigation: navbar-top about

Next, we’ll go through a selection of product analytics metrics. We can use models from the open model hub, or use the modeling library Bach to run data analyses directly on the data store, with Pandas-like syntax.

For each example, head(), to_pandas() or to_numpy() can be used to execute the generated SQL and get the results in your notebook.

Unique users

Let’s see the number of unique users over time, with the unique_users model. By default, it uses the time_aggregation set when the model hub was instantiated, in this case ‘%Y-%m-%d’, so daily.

For monthly_users, this default time_aggregation is overridden by passing a different groupby argument.

# unique users, monthly

monthly_users = modelhub.aggregate.unique_users(df_full, groupby=modelhub.time_agg(df_full, '%Y-%m'))

monthly_users.sort_index(ascending=False).head()

time_aggregation

2022-07 830

2022-06 497

2022-05 1682

2022-04 304

Name: unique_users, dtype: int64

# unique users, daily

daily_users = modelhub.aggregate.unique_users(df)

daily_users.sort_index(ascending=False).head(10)

time_aggregation

2022-07-30 9

2022-07-29 28

2022-07-28 17

2022-07-27 78

2022-07-26 102

2022-07-25 102

2022-07-24 75

2022-07-23 80

2022-07-22 81

2022-07-21 43

Name: unique_users, dtype: int64
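In plain Python, a unique-user count per time bucket comes down to formatting each event’s moment with the time_aggregation pattern and counting distinct user ids per bucket. This is roughly what the count(DISTINCT "user_id") ... GROUP BY to_char("moment", ...) in the generated SQL at the end of this notebook does. The function and the events below are hypothetical and only illustrate the idea.

```python
from collections import defaultdict
from datetime import datetime


def unique_users(events, time_aggregation='%Y-%m-%d'):
    """Count distinct user_ids per time bucket; events are (user_id, moment) pairs."""
    users_per_bucket = defaultdict(set)
    for user_id, moment in events:
        users_per_bucket[moment.strftime(time_aggregation)].add(user_id)
    return {bucket: len(users) for bucket, users in sorted(users_per_bucket.items())}


# hypothetical events: (user_id, moment)
events = [
    ('u1', datetime(2022, 6, 30, 9, 0)),
    ('u1', datetime(2022, 7, 1, 10, 0)),
    ('u2', datetime(2022, 7, 1, 11, 0)),
    ('u1', datetime(2022, 7, 2, 12, 0)),
]
daily = unique_users(events)              # {'2022-06-30': 1, '2022-07-01': 2, '2022-07-02': 1}
monthly = unique_users(events, '%Y-%m')   # {'2022-06': 1, '2022-07': 2}
```

This also explains why daily counts don’t add up to monthly counts: a user who is active on several days is counted once per day but only once per month.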

To see the number of users per main product section, group by its root_location.

# unique users, per main product section

users_root = modelhub.aggregate.unique_users(df, groupby=['application_id', 'root_location'])

users_root.sort_index(ascending=False).head(10)

application_id root_location

objectiv-website privacy 4

join-slack 15

jobs 48

home 683

blog 87

about 52

objectiv-docs tracking 89

taxonomy 99

modeling 95

home 156

Name: unique_users, dtype: int64

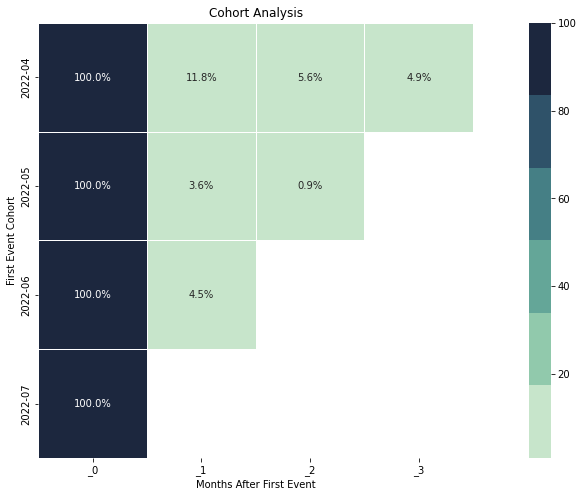

Retention

To measure how well we are doing at keeping users with us after their first interaction, we can use a retention matrix.

To calculate the retention matrix, we need to distribute the users into mutually exclusive cohorts based on the time_period (daily, weekly, monthly, or yearly) in which they first interacted.

In the retention matrix:

- each row represents a cohort;

- each column represents a time range, where time is calculated with respect to the cohort start time;

- the values of the matrix elements are the number or percentage (depending on the percentage parameter) of users in a given cohort that returned in a given time range.

The users’ activity is counted from the start_date used to create the DataFrame with get_objectiv_dataframe (here ‘2022-04-01’ for df_full).

# retention matrix, monthly, with percentages

retention_matrix = modelhub.aggregate.retention_matrix(df_full, time_period='monthly', percentage=True, display=True)

retention_matrix.head()

_0 _1 _2 _3

first_cohort

2022-04 100.0 11.842105 5.592105 4.934211

2022-05 100.0 3.584447 0.850547 NaN

2022-06 100.0 4.513064 NaN NaN

2022-07 100.0 NaN NaN NaN
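The cohort logic described above can be sketched in plain Python for a monthly time_period. The sketch assigns each user to the month they were first seen. It then counts how many users of each cohort are active N months later, and divides by the cohort size. The function names and events are hypothetical, and the sketch is not the modelhub implementation. Cells with no activity are simply missing, which corresponds to the NaN values in the matrix above.

```python
from collections import defaultdict
from datetime import datetime


def month_index(moment):
    """Number of months since year 0, so that month differences are simple subtractions."""
    return moment.year * 12 + moment.month - 1


def retention_matrix(events, percentage=True):
    """events are (user_id, moment) pairs; returns {cohort: {'_offset': value}}."""
    # the first month in which a user was seen defines their cohort
    first_month = {}
    for user_id, moment in events:
        m = month_index(moment)
        first_month[user_id] = min(m, first_month.get(user_id, m))
    # users active in each (cohort, months since cohort start) cell
    active = defaultdict(set)
    for user_id, moment in events:
        cohort = first_month[user_id]
        active[(cohort, month_index(moment) - cohort)].add(user_id)
    matrix = {}
    for (cohort, offset), users in sorted(active.items()):
        label = f'{cohort // 12}-{cohort % 12 + 1:02d}'
        cohort_size = len(active[(cohort, 0)])
        value = 100.0 * len(users) / cohort_size if percentage else len(users)
        matrix.setdefault(label, {})[f'_{offset}'] = value
    return matrix


events = [
    ('a', datetime(2022, 4, 3)), ('a', datetime(2022, 5, 10)),
    ('b', datetime(2022, 4, 20)),
    ('c', datetime(2022, 5, 2)), ('c', datetime(2022, 6, 15)),
]
print(retention_matrix(events))
# {'2022-04': {'_0': 100.0, '_1': 50.0}, '2022-05': {'_0': 100.0, '_1': 100.0}}
```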

Drilling down retention cohorts

In the retention matrix above, we can see a strong drop in retained users for the second cohort (2022-05) in the month after it started: about 3.6% of its users returned, versus about 11.8% for the first cohort. We can directly zoom into the different cohorts and see the difference.

# calculate the first cohort: the first moment each user was seen

cohorts = df_full[['user_id', 'moment']].groupby('user_id')['moment'].min().reset_index()

cohorts = cohorts.rename(columns={'moment': 'first_cohort'})

# add the first cohort of each user to our DataFrame

df_with_cohorts = df_full.merge(cohorts, on='user_id')

# filter data where users belong to the #0 cohort (first seen in April 2022)

cohort0_filter = (df_with_cohorts['first_cohort'] >= datetime(2022, 4, 1)) & (df_with_cohorts['first_cohort'] < datetime(2022, 5, 1))

df_with_cohorts[cohort0_filter]['event_type'].value_counts().head()

event_type

VisibleEvent 3712

PressEvent 2513

ApplicationLoadedEvent 1794

MediaLoadEvent 601

HiddenEvent 259

Name: value_counts, dtype: int64

# filter data where users belong to the #1 cohort (first seen in May 2022, the problematic one)

cohort1_filter = (df_with_cohorts['first_cohort'] >= datetime(2022, 5, 1)) & (df_with_cohorts['first_cohort'] < datetime(2022, 6, 1))

df_with_cohorts[cohort1_filter]['event_type'].value_counts().head()

event_type

VisibleEvent 5074

PressEvent 4661

ApplicationLoadedEvent 2642

MediaLoadEvent 1360

HiddenEvent 688

Name: value_counts, dtype: int64

One interesting thing to note here, for example, is that relative to PressEvents, there are more VisibleEvents in the first cohort than in the second, ‘problematic’ one: about 1.5 VisibleEvents per PressEvent, versus about 1.1.

This is just a simple example to demonstrate the differences you can find between cohorts. You could run other models like top product features, or develop more in-depth analyses.
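To compare cohorts of different sizes, relative numbers are more useful than raw counts. This small sketch copies the two value_counts().head() outputs above and turns them into shares and ratios. The shares are computed over the five event types shown, not over all events.

```python
# value_counts().head() results printed above (top five event types per cohort)
cohort0 = {'VisibleEvent': 3712, 'PressEvent': 2513, 'ApplicationLoadedEvent': 1794,
           'MediaLoadEvent': 601, 'HiddenEvent': 259}
cohort1 = {'VisibleEvent': 5074, 'PressEvent': 4661, 'ApplicationLoadedEvent': 2642,
           'MediaLoadEvent': 1360, 'HiddenEvent': 688}


def shares(counts):
    """Fraction of the listed events that each event type accounts for."""
    total = sum(counts.values())
    return {event_type: count / total for event_type, count in counts.items()}


share0, share1 = shares(cohort0), shares(cohort1)
visible_per_press0 = cohort0['VisibleEvent'] / cohort0['PressEvent']
visible_per_press1 = cohort1['VisibleEvent'] / cohort1['PressEvent']
print(round(share0['VisibleEvent'], 3), round(share1['VisibleEvent'], 3))  # 0.418 0.352
print(round(visible_per_press0, 2), round(visible_per_press1, 2))          # 1.48 1.09
```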

Duration

Here we calculate the average duration of a user’s session, using the session_duration model.

# duration, monthly average

duration_monthly = modelhub.aggregate.session_duration(df_full, groupby=modelhub.time_agg(df_full, '%Y-%m'))

duration_monthly.sort_index(ascending=False).head()

time_aggregation

2022-07 0 days 00:04:39.795993

2022-06 0 days 00:02:54.086814

2022-05 0 days 00:02:58.417140

2022-04 0 days 00:03:02.069818

Name: session_duration, dtype: timedelta64[ns]

# duration, daily average

duration_daily = modelhub.aggregate.session_duration(df)

duration_daily.sort_index(ascending=False).head()

time_aggregation

2022-07-30 0 days 00:00:34.376800

2022-07-29 0 days 00:06:46.552000

2022-07-28 0 days 00:03:00.315214

2022-07-27 0 days 00:02:52.322250

2022-07-26 0 days 00:04:25.374743

Name: session_duration, dtype: timedelta64[ns]
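Session duration depends on how events are grouped into sessions. The SQL generated at the end of this notebook starts a new session when more than 1800 seconds pass between consecutive events of the same user. The sketch below applies that rule to one user’s event moments and averages the duration of each session, measured as last hit minus first hit. It assumes that a "bounce" is a zero-length session. The function names are hypothetical.

```python
from datetime import datetime, timedelta

# same threshold as the generated SQL: a gap of more than 1800 seconds starts a new session
SESSION_GAP = timedelta(seconds=1800)


def sessionize(moments):
    """Split one user's event moments into sessions (lists of moments)."""
    sessions = []
    for moment in sorted(moments):
        if sessions and moment - sessions[-1][-1] <= SESSION_GAP:
            sessions[-1].append(moment)
        else:
            sessions.append([moment])
    return sessions


def average_session_duration(moments, exclude_bounces=True):
    """Average of (last hit - first hit) per session; bounces are zero-length sessions."""
    durations = [session[-1] - session[0] for session in sessionize(moments)]
    if exclude_bounces:
        durations = [d for d in durations if d > timedelta(0)]
    if not durations:
        return None
    return sum(durations, timedelta(0)) / len(durations)


moments = [
    datetime(2022, 7, 1, 10, 0),
    datetime(2022, 7, 1, 10, 5),
    datetime(2022, 7, 1, 10, 20),
    datetime(2022, 7, 1, 11, 0),  # 40 minutes later: a new, single-hit session
]
print(len(sessionize(moments)))                                 # 2
print(average_session_duration(moments))                        # 0:20:00
print(average_session_duration(moments, exclude_bounces=False))  # 0:10:00
```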

To see the average time spent by users in each main product section (per day in this case), group by its root_location.

# duration, daily average per root_location

duration_root_daily = modelhub.aggregate.session_duration(df, groupby=['application_id', 'root_location', modelhub.time_agg(df, '%Y-%m-%d')]).sort_index()

duration_root_daily.head(10)

application_id root_location time_aggregation

objectiv-docs home 2022-07-01 0 days 00:00:07.585500

2022-07-02 0 days 00:00:00.266000

2022-07-03 0 days 00:01:14.708000

2022-07-04 0 days 00:00:25.144000

2022-07-05 0 days 00:00:06.662100

2022-07-06 0 days 00:00:53.232100

2022-07-07 0 days 00:02:07.500500

2022-07-08 0 days 00:02:16.212818

2022-07-09 0 days 00:00:50.589000

2022-07-10 0 days 00:00:00.085000

Name: session_duration, dtype: timedelta64[ns]

# how is the overall time spent distributed?

session_duration = modelhub.aggregate.session_duration(df, groupby='session_id', exclude_bounces=False)

# materialization is needed because the Series already contains aggregated data, and another aggregation cannot be applied directly on top of it

session_duration.materialize().quantile(q=[0.25, 0.50, 0.75]).head()

quantile

0.25 0 days 00:00:00

0.50 0 days 00:00:00.883000

0.75 0 days 00:00:55.435000

Name: session_duration, dtype: timedelta64[ns]
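A quantile with linear interpolation between data points is the same rule that PostgreSQL’s percentile_cont uses. Python’s statistics.quantiles uses this rule with method='inclusive'. Whether Bach’s quantile maps to percentile_cont is an assumption here. The durations below are hypothetical values in seconds, with bounces included as 0. Note how a 0.25 quantile of 0 indicates that roughly a quarter or more of the sessions are zero-length, which is consistent with the output above when exclude_bounces=False.

```python
import statistics

# hypothetical session durations in seconds, including bounces (0 seconds)
durations = [0, 0, 0, 1, 30, 60, 120, 300]

# method='inclusive' interpolates linearly between data points (cut points for q = 0.25, 0.50, 0.75)
quartiles = statistics.quantiles(durations, n=4, method='inclusive')
print(quartiles)  # [0.0, 15.5, 75.0]
```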

Top used product features

To see which features are most used, we can use the top_product_features model.

# see top used product features - by default we select only user actions (InteractiveEvents)

top_product_features = modelhub.aggregate.top_product_features(df)

top_product_features.head()

user_id_nunique

application feature_nice_name event_type

objectiv-website Link: about-us located at Root Location: home => Navigation: navbar-top PressEvent 56

Link: spin-up-the-demo located at Root Location: home => Content: hero PressEvent 45

Link: docs located at Root Location: home => Navigation: navbar-top PressEvent 34

Pressable: hamburger located at Root Location: home => Navigation: navbar-top PressEvent 29

Link: browse-on-github located at Root Location: home => Content: hero PressEvent 27
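The idea behind a top-features ranking can be sketched in plain Python. The sketch keeps only user actions, meaning events whose stack_event_types contain ‘InteractiveEvent’. It then counts distinct users per (application, feature, event type) and sorts the result in descending order. The function, helper and events are hypothetical, and the sketch is not the modelhub implementation.

```python
from collections import defaultdict

INTERACTIVE = ['AbstractEvent', 'InteractiveEvent', 'PressEvent']
NON_INTERACTIVE = ['AbstractEvent', 'NonInteractiveEvent', 'VisibleEvent']


def top_product_features(events, n=5):
    """Distinct users per (application, feature, event type), user actions only."""
    users = defaultdict(set)
    for event in events:
        if 'InteractiveEvent' not in event['stack_event_types']:
            continue
        key = (event['application'], event['feature_nice_name'], event['event_type'])
        users[key].add(event['user_id'])
    ranked = sorted(users.items(), key=lambda kv: len(kv[1]), reverse=True)
    return [(key, len(user_ids)) for key, user_ids in ranked[:n]]


def make_event(user_id, feature, event_type, stack_event_types):
    return {'user_id': user_id, 'application': 'objectiv-website', 'feature_nice_name': feature,
            'event_type': event_type, 'stack_event_types': stack_event_types}


events = [
    make_event('u1', 'Link: docs', 'PressEvent', INTERACTIVE),
    make_event('u2', 'Link: docs', 'PressEvent', INTERACTIVE),
    make_event('u1', 'Link: docs', 'PressEvent', INTERACTIVE),  # same user again: counted once
    make_event('u3', 'Link: about-us', 'PressEvent', INTERACTIVE),
    make_event('u4', 'Root Location: home', 'VisibleEvent', NON_INTERACTIVE),  # not a user action
]
top = top_product_features(events)
print(top)
```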

Top used features per product area

We also want to look at which features were used most in our top product areas.

# select only user actions, so stack_event_types must contain 'InteractiveEvent'

interactive_events = df[df.stack_event_types.json.array_contains('InteractiveEvent')]

# from these interactions, get the number of unique users per application, root_location, feature, and event type.

top_interactions = modelhub.agg.unique_users(interactive_events, groupby=['application_id','root_location','feature_nice_name', 'event_type'])

top_interactions = top_interactions.reset_index()

# let's look at the homepage on our website

home_users = top_interactions[(top_interactions.application_id == 'objectiv-website') & (top_interactions.root_location == 'home')]

home_users.sort_values('unique_users', ascending=False).head()

application_id root_location feature_nice_name event_type unique_users

0 objectiv-website home Link: about-us located at Root Location: home => Navigation: navbar-top PressEvent 56

1 objectiv-website home Link: spin-up-the-demo located at Root Location: home => Content: hero PressEvent 45

2 objectiv-website home Link: docs located at Root Location: home => Navigation: navbar-top PressEvent 34

3 objectiv-website home Pressable: hamburger located at Root Location: home => Navigation: navbar-top PressEvent 29

4 objectiv-website home Link: browse-on-github located at Root Location: home => Content: hero PressEvent 27

From the same top_interactions object, we can see the top used features on our documentation, which is a separate application.

# see the top used features on our documentation application

docs_users = top_interactions[top_interactions.application_id == 'objectiv-docs']

docs_users.sort_values('unique_users', ascending=False).head()

application_id root_location feature_nice_name event_type unique_users

0 objectiv-docs home Link: quickstart-guide located at Root Location: home => Navigation: docs-sidebar PressEvent 25

1 objectiv-docs home Link: get-a-launchpad located at Root Location: home => Navigation: docs-sidebar PressEvent 18

2 objectiv-docs home Link: introduction located at Root Location: home => Navigation: docs-sidebar PressEvent 18

3 objectiv-docs modeling Link: tracking located at Root Location: modeling => Navigation: navbar-top PressEvent 17

4 objectiv-docs tracking Link: logo located at Root Location: tracking => Navigation: navbar-top PressEvent 17

Conversions

Users contribute to product goals by converting, for example by signing up. Here we look at how users convert to such goals. First, we define a conversion event, which in this example is clicking a link to our GitHub repo.

# create a column with the location stacks of presses that lead to our GitHub repo:

# first keep the part of each location stack from the 'objectiv-on-github' LinkContext onwards (empty if there is none)

df['github_press'] = df.location_stack.json[{'id': 'objectiv-on-github', '_type': 'LinkContext'}:]

# where the location stack contains a 'github' LinkContext, use the full location stack instead

df.loc[df.location_stack.json[{'id': 'github', '_type': 'LinkContext'}:]!=[],'github_press'] = df.location_stack

# define which events to use as conversion events

modelhub.add_conversion_event(location_stack=df.github_press, event_type='PressEvent', name='github_press')

This conversion event can then be used by several models using the defined name (‘github_press’). First we calculate the number of unique converted users.

# number of conversions, daily

df['is_conversion_event'] = modelhub.map.is_conversion_event(df, 'github_press')

conversions = modelhub.aggregate.unique_users(df[df.is_conversion_event])

conversions.to_frame().sort_index(ascending=False).head(10)

unique_users

time_aggregation

2022-07-28 1

2022-07-27 1

2022-07-26 3

2022-07-25 1

2022-07-24 1

2022-07-21 1

2022-07-20 2

2022-07-18 2

2022-07-17 1

2022-07-15 1
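Conceptually, a conversion event here is a PressEvent whose location stack contains a link to GitHub. Converted users per day are then the distinct users with such an event on that day. The plain-Python sketch below follows that reading. The GITHUB_LINK_IDS set, the functions and the events are hypothetical, and the sketch simplifies what add_conversion_event and is_conversion_event actually do.

```python
from collections import defaultdict
from datetime import datetime

# assumption for this sketch: these link ids identify presses that lead to the GitHub repo
GITHUB_LINK_IDS = {'github', 'objectiv-on-github'}


def is_conversion_event(event):
    """A PressEvent whose location stack contains a LinkContext pointing to GitHub."""
    if event['event_type'] != 'PressEvent':
        return False
    return any(ctx['_type'] == 'LinkContext' and ctx['id'] in GITHUB_LINK_IDS
               for ctx in event['location_stack'])


def converted_users_per_day(events):
    users = defaultdict(set)
    for event in events:
        if is_conversion_event(event):
            users[event['moment'].strftime('%Y-%m-%d')].add(event['user_id'])
    return {day: len(user_ids) for day, user_ids in sorted(users.items())}


home = {'id': 'home', '_type': 'RootLocationContext'}
github_link = {'id': 'github', '_type': 'LinkContext'}
docs_link = {'id': 'docs', '_type': 'LinkContext'}
events = [
    {'user_id': 'u1', 'event_type': 'PressEvent', 'location_stack': [home, github_link],
     'moment': datetime(2022, 7, 26, 9, 0)},
    {'user_id': 'u2', 'event_type': 'PressEvent', 'location_stack': [home, github_link],
     'moment': datetime(2022, 7, 26, 15, 0)},
    {'user_id': 'u1', 'event_type': 'VisibleEvent', 'location_stack': [home, github_link],
     'moment': datetime(2022, 7, 27, 9, 0)},   # not a press: no conversion
    {'user_id': 'u3', 'event_type': 'PressEvent', 'location_stack': [home, docs_link],
     'moment': datetime(2022, 7, 27, 10, 0)},  # press on another link: no conversion
]
print(converted_users_per_day(events))  # {'2022-07-26': 2}
```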

Conversion rate

To calculate the daily conversion rate, we use the daily_users Series created earlier. Days without any conversions show NaN, because conversions has no value for those days.

# conversion rate, daily

conversion_rate = conversions / daily_users

conversion_rate.sort_index(ascending=False).head(10)

time_aggregation

2022-07-30 NaN

2022-07-29 NaN

2022-07-28 0.058824

2022-07-27 0.012821

2022-07-26 0.029412

2022-07-25 0.009804

2022-07-24 0.013333

2022-07-23 NaN

2022-07-22 NaN

2022-07-21 0.023256

Name: unique_users, dtype: float64
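The division above aligns the two Series on their time_aggregation index. This plain-Python sketch reproduces a few of the values with dicts, using numbers copied from the outputs above. Days missing from conversions get None, which plays the role of NaN.

```python
# converted users and unique users per day, copied from the outputs above
conversions = {'2022-07-28': 1, '2022-07-27': 1, '2022-07-26': 3}
daily_users = {'2022-07-30': 9, '2022-07-29': 28, '2022-07-28': 17,
               '2022-07-27': 78, '2022-07-26': 102}

# align on the day; days without any conversion get None, like the NaN values above
conversion_rate = {
    day: conversions[day] / users if day in conversions else None
    for day, users in daily_users.items()
}
print(round(conversion_rate['2022-07-28'], 6))  # 0.058824
```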

# combined DataFrame with #conversions + conversion rate, daily

conversions_plus_rate = conversions.to_frame().merge(conversion_rate.to_frame(), on='time_aggregation', how='left').sort_index(ascending=False)

conversions_plus_rate = conversions_plus_rate.rename(columns={'unique_users_x': 'converted_users', 'unique_users_y': 'conversion_rate'})

conversions_plus_rate.head()

converted_users conversion_rate

time_aggregation

2022-07-28 1 0.058824

2022-07-27 1 0.012821

2022-07-26 3 0.029412

2022-07-25 1 0.009804

2022-07-24 1 0.013333

Features before conversion

We can calculate what users did before converting.

# features used before users converted

top_features_before_conversion = modelhub.agg.top_product_features_before_conversion(df, name='github_press')

top_features_before_conversion.head()

unique_users

application feature_nice_name event_type

objectiv-website Pressable: hamburger located at Root Location: home => Navigation: navbar-top PressEvent 3

objectiv-docs Link: tracking located at Root Location: taxonomy => Navigation: navbar-top PressEvent 3

Link: get-started-in-your-notebook located at Root Location: modeling => Navigation: docs-... PressEvent 2

Link: basic-user-intent-analysis located at Root Location: modeling => Navigation: docs-si... PressEvent 2

Link: core-concepts located at Root Location: modeling => Navigation: docs-sidebar => Expa... PressEvent 2
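One way to read "what users did before converting" is shown in the sketch below. The sketch takes each session that contains a conversion and keeps the user actions before its first conversion. It then counts distinct users per feature. Treating this per session is an assumption, and the exact logic of top_product_features_before_conversion may differ. The function, helper and events are hypothetical, and integer moments stand in for timestamps.

```python
from collections import defaultdict


def top_features_before_conversion(events, n=5):
    """Distinct users per feature used before the first conversion of a converting session."""
    sessions = defaultdict(list)
    for event in events:
        sessions[event['session_id']].append(event)

    users_per_feature = defaultdict(set)
    for session_events in sessions.values():
        session_events.sort(key=lambda e: e['moment'])
        conversion_moments = [e['moment'] for e in session_events if e['is_conversion']]
        if not conversion_moments:
            continue  # only sessions with a conversion count
        first_conversion = conversion_moments[0]
        for e in session_events:
            if e['is_interactive'] and e['moment'] < first_conversion:
                users_per_feature[e['feature']].add(e['user_id'])

    ranked = sorted(users_per_feature.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return [(feature, len(user_ids)) for feature, user_ids in ranked[:n]]


def hit(session_id, user_id, moment, feature, is_conversion=False, is_interactive=True):
    # moment is an integer standing in for a timestamp in this toy data
    return {'session_id': session_id, 'user_id': user_id, 'moment': moment, 'feature': feature,
            'is_interactive': is_interactive, 'is_conversion': is_conversion}


events = [
    hit(1, 'u1', 1, 'Pressable: hamburger'),
    hit(1, 'u1', 2, 'Link: docs'),
    hit(1, 'u1', 3, 'Link: github', is_conversion=True),
    hit(1, 'u1', 4, 'Link: blog'),  # after the conversion: ignored
    hit(2, 'u2', 0, 'Overlay: star-us-notification-overlay', is_interactive=False),  # not a user action
    hit(2, 'u2', 1, 'Pressable: hamburger'),
    hit(2, 'u2', 2, 'Link: github', is_conversion=True),
    hit(3, 'u3', 1, 'Link: docs'),  # session without a conversion: ignored
]
print(top_features_before_conversion(events))  # [('Pressable: hamburger', 2), ('Link: docs', 1)]
```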

Exact features that converted

Let’s understand which product features actually triggered the conversion.

# features that triggered the conversion

conversion_locations = modelhub.agg.unique_users(df[df.is_conversion_event], groupby=['application_id', 'feature_nice_name', 'event_type'])

conversion_locations.sort_values(ascending=False).to_frame().head()

unique_users

application_id feature_nice_name event_type

objectiv-website Link: github located at Root Location: home => Navigation: navbar-top PressEvent 8

objectiv-docs Link: github located at Root Location: tracking => Navigation: navbar-top PressEvent 4

objectiv-website Link: github located at Root Location: blog => Navigation: navbar-top PressEvent 2

objectiv-docs Link: github located at Root Location: modeling => Navigation: navbar-top PressEvent 2

objectiv-website Link: github located at Root Location: home => Navigation: navbar-top => Overlay: hamburge... PressEvent 2

Time spent before conversion

Finally, let’s see how much time converted users spent before they converted.

# label hits in sessions that contain at least one conversion

df['converted_users'] = modelhub.map.conversions_counter(df, name='github_press') >= 1

# label hits where, at that point in time, there have been 0 conversions in the session

df['zero_conversions_at_moment'] = modelhub.map.conversions_in_time(df, 'github_press') == 0

# filter on above created labels

converted_users = df[(df.converted_users & df.zero_conversions_at_moment)]

# how much time do users spend before they convert?

modelhub.aggregate.session_duration(converted_users, groupby=None).to_frame().head()

session_duration

0 0 days 00:05:59.359188
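The filtering steps above can be mirrored in plain Python. The sketch keeps only sessions that contain a conversion and takes their hits before the first conversion (zero conversions so far). It measures the last of those hits minus the first, then averages over sessions. The function, the helper t and the data are hypothetical. Unlike the model, the sketch does not drop zero-length (bounce) sessions.

```python
from datetime import datetime, timedelta


def average_time_before_conversion(sessions):
    """sessions: one list of (moment, is_conversion) hits per session."""
    durations = []
    for hits in sessions:
        hits = sorted(hits)
        if not any(is_conversion for _, is_conversion in hits):
            continue  # keep only sessions with at least one conversion
        # keep the hits where the session has had 0 conversions so far
        before = []
        for moment, is_conversion in hits:
            if is_conversion:
                break
            before.append(moment)
        if before:
            durations.append(before[-1] - before[0])
    if not durations:
        return None
    return sum(durations, timedelta(0)) / len(durations)


def t(hour, minute):
    return datetime(2022, 7, 26, hour, minute)


sessions = [
    [(t(10, 0), False), (t(10, 4), False), (t(10, 6), True)],  # 4 minutes before converting
    [(t(12, 0), False), (t(12, 8), False), (t(12, 10), True), (t(12, 20), False)],  # 8 minutes
    [(t(14, 0), False), (t(14, 30), False)],  # no conversion: ignored
]
print(average_time_before_conversion(sessions))  # 0:06:00
```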

- bach.SeriesJson.json

- modelhub.ModelHub.add_conversion_event

- modelhub.Map.is_conversion_event

- modelhub.Aggregate.unique_users

- bach.Series.to_frame

- bach.DataFrame.sort_index

- bach.DataFrame.head

- modelhub.Aggregate.top_product_features_before_conversion

- modelhub.Map.conversions_counter

- modelhub.Map.conversions_in_time

- modelhub.Aggregate.session_duration

Get the SQL for any analysis

The SQL for any analysis can be exported with one command, so you can use models in production directly to simplify data debugging and delivery to BI tools like Metabase, or to tools like dbt. See how you can quickly create BI dashboards with this.

# show the underlying SQL for this dataframe - works for any dataframe/model in Objectiv

display_sql_as_markdown(monthly_users)

WITH "manual_materialize___cac8db8d9436fd6876febbcca93c5e00" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"cookie_id" AS "user_id",

cast("value"->>'_type' AS text) AS "event_type",

cast(cast("value"->>'_types' AS text) AS JSONB) AS "stack_event_types",

cast(cast("value"->>'location_stack' AS text) AS JSONB) AS "location_stack",

cast(cast("value"->>'time' AS text) AS bigint) AS "time",

jsonb_path_query_array(cast(cast("value"->>'global_contexts' AS text) AS JSONB), '$[*] ? (@._type == $type)', '{"type":"ApplicationContext"}') AS "application"

FROM "data"

),

"getitem_where_boolean___15c0640d971e6c8907dd66f5cc011790" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"location_stack" AS "location_stack",

"time" AS "time",

"application" AS "application"

FROM "manual_materialize___cac8db8d9436fd6876febbcca93c5e00"

WHERE ((("day" >= cast('2022-04-01' AS date))) AND (("day" <= cast('2022-07-30' AS date))))

),

"context_data___97f08e249a30951af2632534e7560f01" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"application" AS "application"

FROM "getitem_where_boolean___15c0640d971e6c8907dd66f5cc011790"

),

"session_starts___50df85d9c667478e9f2f32087a280170" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"application" AS "application",

CASE WHEN (extract(epoch FROM (("moment") - (lag("moment", 1, cast(NULL AS timestamp WITHOUT TIME ZONE)) OVER (PARTITION BY "user_id" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)))) <= cast(1800 AS bigint)) THEN cast(NULL AS boolean)

ELSE cast(TRUE AS boolean)

END AS "is_start_of_session"

FROM "context_data___97f08e249a30951af2632534e7560f01"

),

"session_id_and_count___1ef0a194f924a63b68c6f407eebf85a8" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"application" AS "application",

"is_start_of_session" AS "is_start_of_session",

CASE WHEN "is_start_of_session" THEN row_number() OVER (PARTITION BY "is_start_of_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)

ELSE cast(NULL AS bigint)

END AS "session_start_id",

count("is_start_of_session") OVER (ORDER BY "user_id" ASC NULLS LAST, "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "is_one_session"

FROM "session_starts___50df85d9c667478e9f2f32087a280170"

),

"objectiv_sessionized_data___89a326e7d7da328e5707d8ba93091e67" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"application" AS "application",

"is_start_of_session" AS "is_start_of_session",

"session_start_id" AS "session_start_id",

"is_one_session" AS "is_one_session",

first_value("session_start_id") OVER (PARTITION BY "is_one_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "session_id",

row_number() OVER (PARTITION BY "is_one_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "session_hit_number"

FROM "session_id_and_count___1ef0a194f924a63b68c6f407eebf85a8"

) SELECT to_char("moment", 'YYYY"-"MM') AS "time_aggregation",

count(DISTINCT "user_id") AS "unique_users"

FROM "objectiv_sessionized_data___89a326e7d7da328e5707d8ba93091e67"

GROUP BY to_char("moment", 'YYYY"-"MM')

That’s it! Join us on Slack if you have any questions or suggestions.

Next Steps

Play with this notebook in Objectiv Up

Spin up a full-fledged product analytics pipeline with Objectiv Up in under 5 minutes, and play with this example notebook yourself.

Use this notebook with your own data

You can use the example notebooks on any dataset that was collected with Objectiv’s tracker, so feel free to use them to bootstrap your own projects. They are available as Jupyter notebooks on our GitHub repository. See instructions to set up the Objectiv tracker.

Check out related example notebooks

Marketing Analytics notebook - analyze the above metrics and more for users coming from marketing efforts.

Funnel Discovery notebook - analyze the paths that users take that impact your product goals.