In this release, we made it possible to quickly build BI dashboards with common product & marketing analytics metrics, using the pre-built data models from the open model hub. Examples of these models are unique users, session duration, retention, top used features, funnel discovery, user behavior prediction, and more.

To suit your specific needs, the models work with any subset of your data (e.g. limiting to converted users), can be customized with parameters (e.g. calculating either absolute numbers or ratios), and can easily be combined with other models.

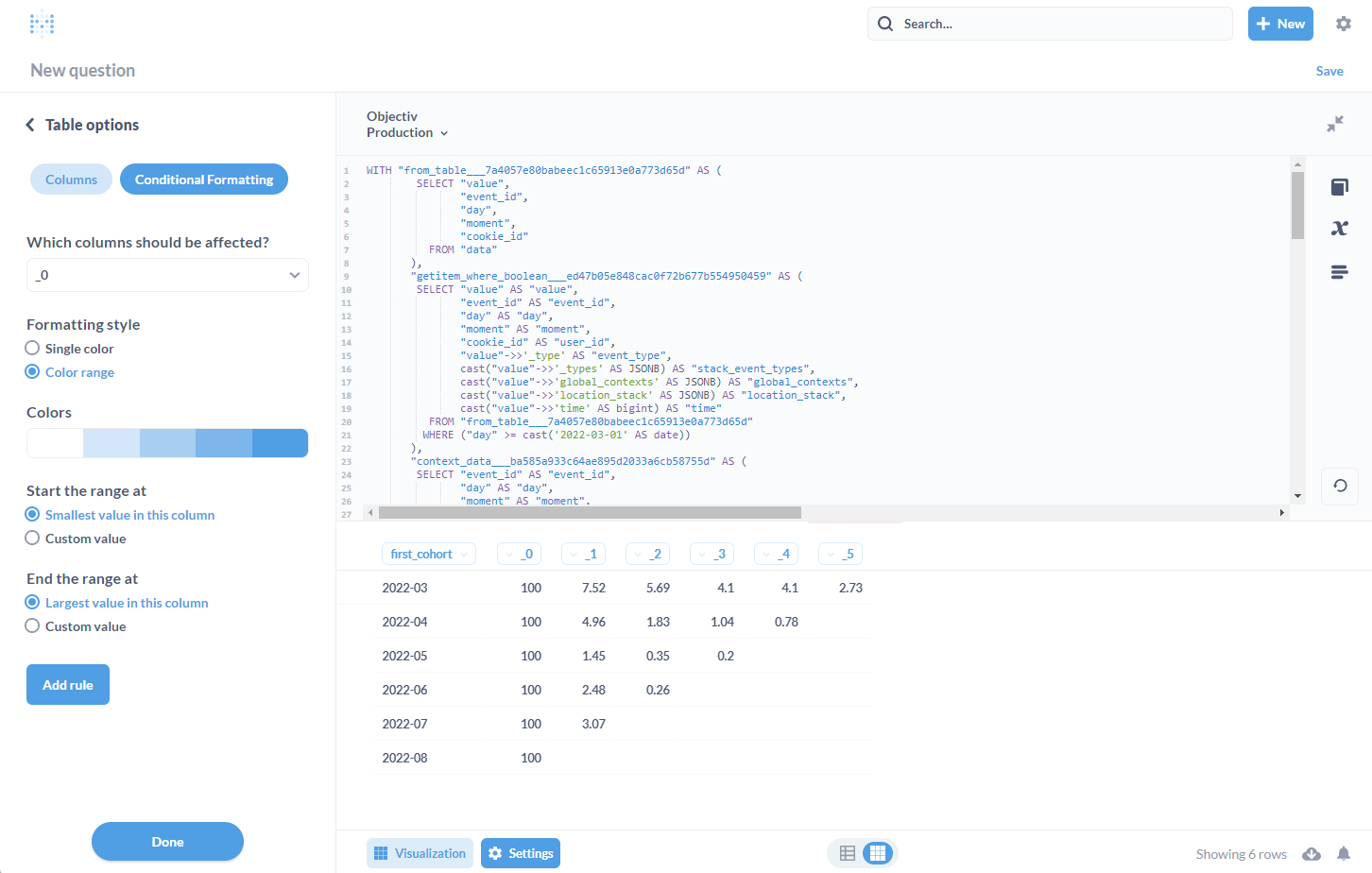

Once your analysis is ready, exporting it to (human-readable) SQL is just one view_sql()

operation. See below for an example retention analysis built with the retention_matrix model.

print(retention.view_sql())

# or to display markdown-format: display_sql_as_markdown(retention)

Resulting in:

WITH "from_table___7a4057e80babeec1c65913e0a773d65d" AS (

SELECT "value",

"event_id",

"day",

"moment",

"cookie_id"

FROM "data"

),

"getitem_where_boolean___ed47b05e848cac0f72b677b554950459" AS (

SELECT "value" AS "value",

"event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"cookie_id" AS "user_id",

"value"->>'_type' AS "event_type",

cast("value"->>'_types' AS JSONB) AS "stack_event_types",

cast("value"->>'global_contexts' AS JSONB) AS "global_contexts",

cast("value"->>'location_stack' AS JSONB) AS "location_stack",

cast("value"->>'time' AS bigint) AS "time"

FROM "from_table___7a4057e80babeec1c65913e0a773d65d"

WHERE ("day" >= cast('2022-03-01' AS date))

),

"context_data___ba585a933c64ae895d2033a6cb58755d" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"global_contexts" AS "global_contexts",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types"

FROM "getitem_where_boolean___ed47b05e848cac0f72b677b554950459"

),

"session_starts___6c2c77346e814a0be19ed2d6d6fd49d8" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"global_contexts" AS "global_contexts",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

CASE WHEN (extract(epoch FROM (("moment") - (lag("moment", 1, cast(NULL AS TIMESTAMP WITHOUT TIME ZONE)) OVER (PARTITION BY "user_id" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)))) <= cast(1800 AS bigint)) THEN cast(NULL AS boolean)

ELSE cast(TRUE AS boolean)

END AS "is_start_of_session"

FROM "context_data___ba585a933c64ae895d2033a6cb58755d"

),

"session_id_and_count___292baab8cd8cf972243b6487a774e7e4" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"global_contexts" AS "global_contexts",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"is_start_of_session" AS "is_start_of_session",

CASE WHEN "is_start_of_session" THEN row_number() OVER (PARTITION BY "is_start_of_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)

ELSE cast(NULL AS bigint)

END AS "session_start_id",

count("is_start_of_session") OVER (ORDER BY "user_id" ASC NULLS LAST, "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "is_one_session"

FROM "session_starts___6c2c77346e814a0be19ed2d6d6fd49d8"

),

"objectiv_sessionized_data___96cf3bd3a8b620215449a055d2904277" AS (

SELECT "event_id" AS "event_id",

"day" AS "day",

"moment" AS "moment",

"user_id" AS "user_id",

"global_contexts" AS "global_contexts",

"location_stack" AS "location_stack",

"event_type" AS "event_type",

"stack_event_types" AS "stack_event_types",

"is_start_of_session" AS "is_start_of_session",

"session_start_id" AS "session_start_id",

"is_one_session" AS "is_one_session",

first_value("session_start_id") OVER (PARTITION BY "is_one_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "session_id",

row_number() OVER (PARTITION BY "is_one_session" ORDER BY "moment" ASC NULLS LAST, "event_id" ASC NULLS LAST RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS "session_hit_number"

FROM "session_id_and_count___292baab8cd8cf972243b6487a774e7e4"

),

"reset_index___ce84fe48ed9b1822ff795ae87ce54b36" AS (

SELECT "user_id" AS "user_id",

min("moment") AS "moment"

FROM "objectiv_sessionized_data___96cf3bd3a8b620215449a055d2904277"

GROUP BY "user_id"

),

"merge_sql___75002ce3bb7ba559699814dbcbe8245f" AS (

SELECT "l"."event_id" AS "event_id",

COALESCE("l"."user_id", "r"."user_id") AS "user_id",

"l"."moment" AS "moment",

"r"."moment" AS "first_cohort_ts"

FROM "objectiv_sessionized_data___96cf3bd3a8b620215449a055d2904277" AS l

INNER

JOIN "reset_index___ce84fe48ed9b1822ff795ae87ce54b36" AS r

ON ("l"."user_id" = "r"."user_id")

),

"reset_index___25ebe6cd511a8e5763c2ac31e210276e" AS (

SELECT to_char("first_cohort_ts", 'YYYY"-"MM') AS "first_cohort",

('_' || cast((((cast(to_char("moment", 'YYYY') AS bigint) - cast(to_char("first_cohort_ts", 'YYYY') AS bigint)) * cast(12 AS bigint)) + (cast(to_char("moment", 'MM') AS bigint) - cast(to_char("first_cohort_ts", 'MM') AS bigint))) AS text)) AS "cohort_distance",

count(DISTINCT "user_id") AS "user_id_nunique"

FROM "merge_sql___75002ce3bb7ba559699814dbcbe8245f"

GROUP BY to_char("first_cohort_ts", 'YYYY"-"MM'), ('_' || cast((((cast(to_char("moment", 'YYYY') AS bigint) - cast(to_char("first_cohort_ts", 'YYYY') AS bigint)) * cast(12 AS bigint)) + (cast(to_char("moment", 'MM') AS bigint) - cast(to_char("first_cohort_ts", 'MM') AS bigint))) AS text))

),

"unstack___3cd1a6336d6094ed7856e093183af2ff" AS (

SELECT "first_cohort" AS "first_cohort",

max("user_id_nunique") AS "user_id_nunique",

max("cohort_distance") AS "cohort_distance",

max(CASE WHEN ("cohort_distance" = '_2') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_2__user_id_nunique",

max(CASE WHEN ("cohort_distance" = '_4') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_4__user_id_nunique",

max(CASE WHEN ("cohort_distance" = '_5') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_5__user_id_nunique",

max(CASE WHEN ("cohort_distance" = '_3') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_3__user_id_nunique",

max(CASE WHEN ("cohort_distance" = '_0') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_0__user_id_nunique",

max(CASE WHEN ("cohort_distance" = '_1') THEN "user_id_nunique" ELSE cast(NULL AS bigint) END) AS "_1__user_id_nunique"

FROM "reset_index___25ebe6cd511a8e5763c2ac31e210276e"

GROUP BY "first_cohort"

) SELECT "first_cohort" AS "first_cohort",

((cast("_0__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_0",

((cast("_1__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_1",

((cast("_2__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_2",

((cast("_3__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_3",

((cast("_4__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_4",

((cast("_5__user_id_nunique" AS double precision) / "_0__user_id_nunique") * cast(100 AS bigint)) AS "_5"

FROM "unstack___3cd1a6336d6094ed7856e093183af2ff"

ORDER BY "first_cohort" ASC NULLS LAST

This enables you to go straight from modeling to building a dashboard in the BI-tool of your choice.

In the screenshot below we show an example Metabase setup where we simply use the resulting SQL export directly in a dashboard chart, running on the same, full dataset we used to build the analysis:

Using SQL from a pre-built model directly for a chart in Metabase.

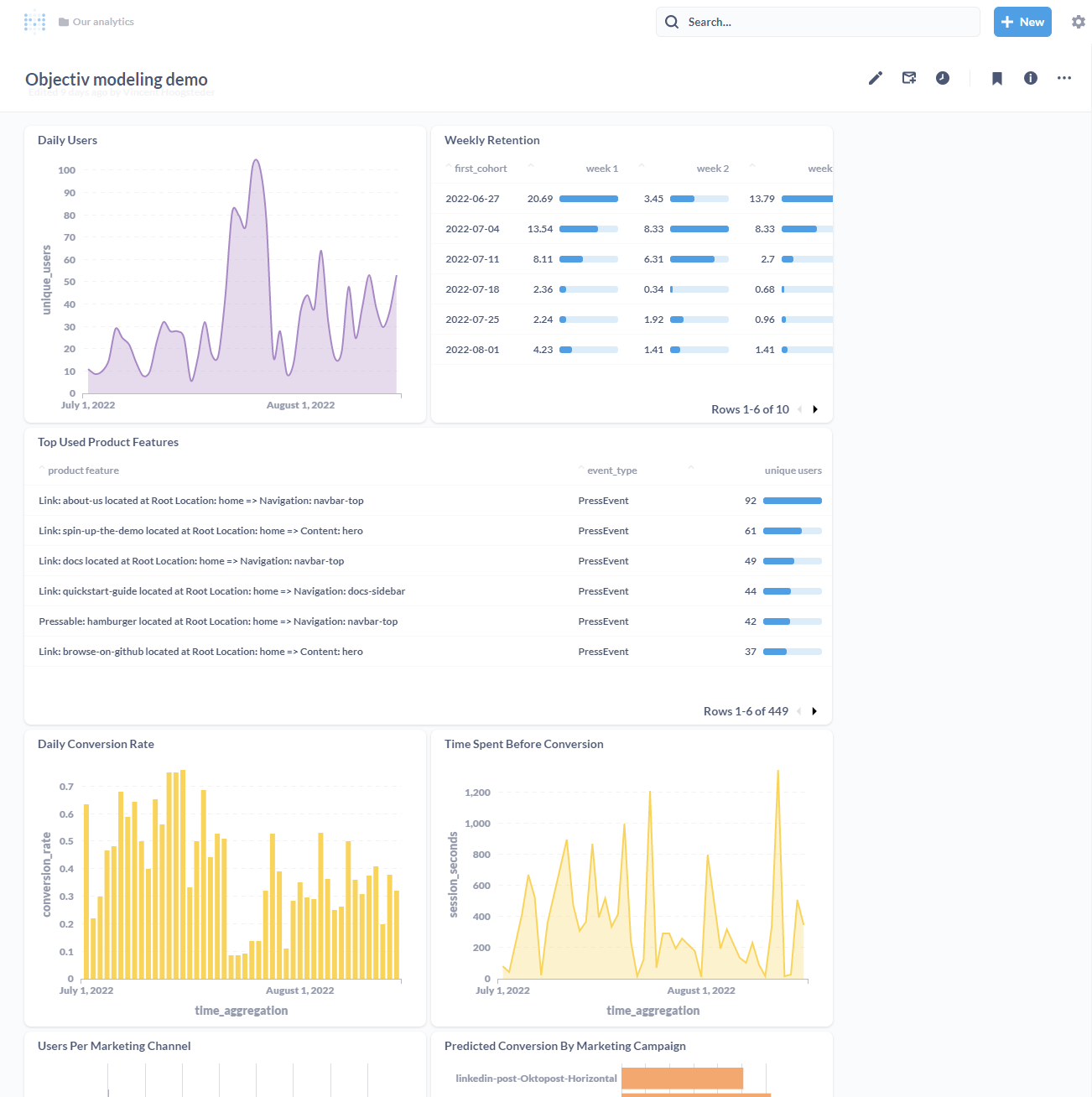

The screenshot below shows an example of a full dashboard we built in just a few minutes, using only pre-built models from the open model hub:

Example BI dashboard built with models from the open model hub.

See our Demo to try it out

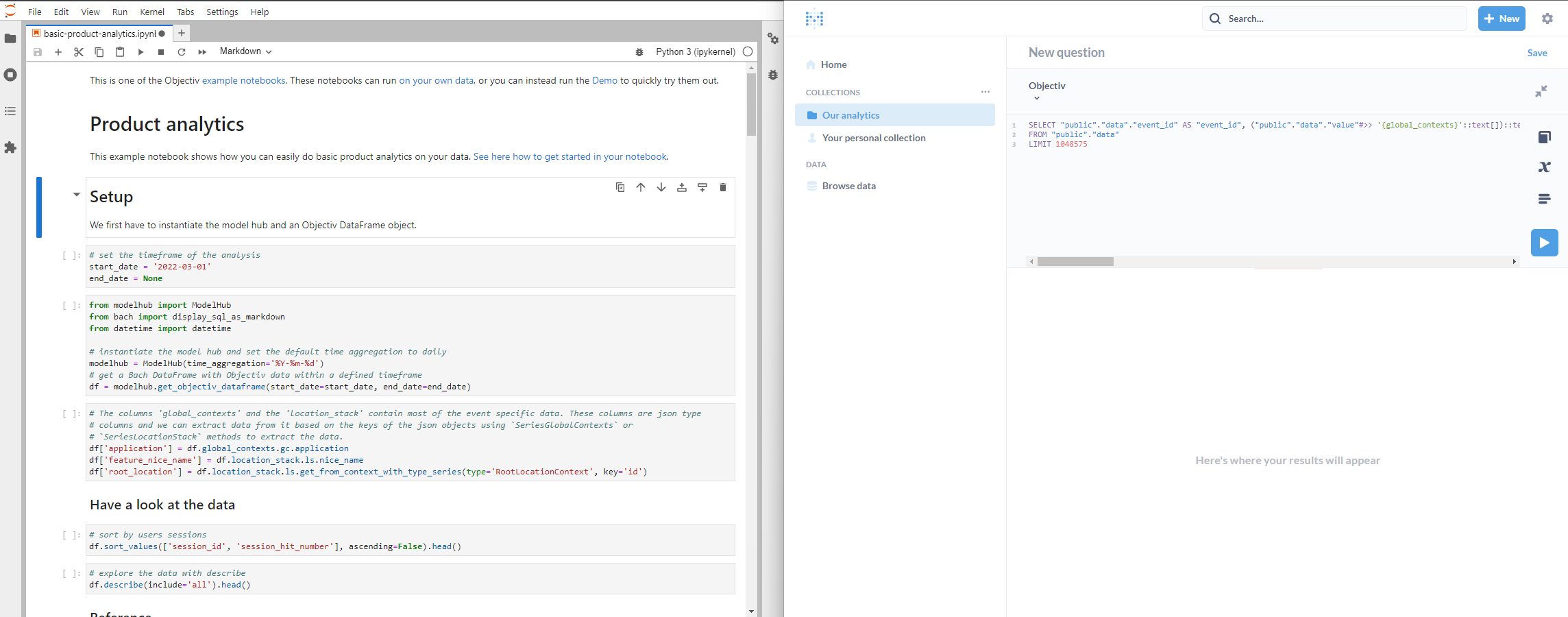

You can test all of this easily by running the Docker-based Demo that you can download and spin up in just a few minutes. It includes SQL export instructions for every notebook, and ships with an instance of open-source BI software Metabase, so you can use it end-to-end to build dashboards.

The Demo with one of the Jupyter notebooks and a Metabase instance.

How to get it

Install the modelhub/Bach packages from PyPI:

pip install objectiv-modelhub

Or upgrade if you’ve already installed it:

pip install --upgrade objectiv-modelhub

To try it out in a lightweight setup, see how to run the Docker-based demo in the quickstart guide.

Office Hours

If you have any questions about this release or anything else, or if you just want to say 'Hi!' to team Objectiv, we have Office Hours every Thursday at 4pm CET, 10am EST that you can freely dial in to. If you're in a timezone that doesn’t fit well, just ping us on Slack and we'll send over an invite for a better moment.